Whose Evaluation Is It, Anyway?: Outsourcing Teacherly Judgement

An unpopular thing about me is that I’ve always basically enjoyed marking. Like, not the grading part (I always struggled with numerating the denominator, and I just don’t believe there’s a meaningful difference between a B- essay and a C+ one in the honest-to-goodness real world), but the marking part? Entering into discourse with my students? From a time perspective, it always damn near killed me, but I genuinely enjoyed it.

That said, I’ve always worked in roles where I basically had the freedom to eschew a lot of the parts of evaluation that didn’t make sense to me, like taking attendance (I am bad at enforcing it and also don’t care), participation (I don’t know how to evaluate your engagement unless I exclusively use metrics, like hand-raising and speaking in class, that would have wildly disadvantaged me as a student), and late penalties (see attendance). I can see how, if you had to spend a lot of time on a practice that was alien to your own values about what “mattered” in the classroom, that would grind you down over time. That inability to act within your own values as a teacher is no small thing: as we’ve talked about around these parts before, it leads to moral stress and burnout.

But is automation the answer?

Teaching is Relational (Obviously)

The first time I got all het up about the outsourcing of teacherly judgement was way back in Digital Detox 2021, when I took exception to the way tools like Microsoft Habits label students. The idea that an analytic tool would be used to shape how faculty interact with their students never sat right with me. Now that we know Microsoft is investing in OpenAI, the company behind ChatGPT, it makes me wonder how much AI composition tools are going to supercharge these judgements: will Habits now draft a notification about problematic behaviour to your students without your input? Will it have learned enough about your composition style from your hours spent in Word (this might be a good moment to note that I draft all the Detox posts in Scrivener) to even sound like you?

I guess this is a time saver, but it also makes me wonder what the point of education is, anyway. Next week, we’re going to talk about what happens when AI starts marking AI (thanks, Robot Wars, I hate it), but today I am mostly interested in thinking about putting AI in the position of evaluating people. We know that learning is deeply relational, and that how we feel about where we are and the people we work with directly impacts our learning. Now, it’s a mistake to put the teacher at the centre of this relationship: learners establish deep bonds with classmates, and even with the institution and the material, and those bonds also impact learning (in fact, connection to the material is actually what that famous James Comer quotation, “No significant learning can occur without a significant relationship,” is all about). But even recognizing that, I struggle to think through what it means to remove the teacher from the equation when it comes to assessment and evaluation.

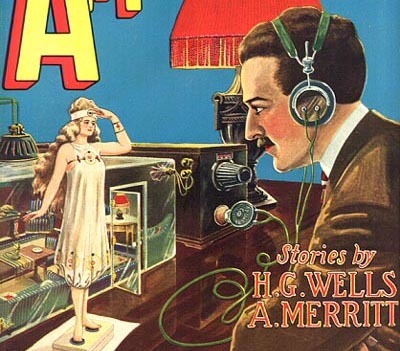

The truth is, education as a system has been trying to remove the teacher from the equation for a shockingly long time. Think back to the emergence of teaching machines, first under Sidney Pressey and later under B.F. Skinner (and for this I really must insist that you read Audrey Watters’ book Teaching Machines: The History of Personalized Learning, which is by a wide margin the most engaging book on edtech ever penned). The interest in making learning into something objective and monitored by machines was very much fuelled by the panic around women entering the teaching profession: once women began to outnumber men, the question of how such irrational creatures would ever manage to be objective evaluators became a big issue. The benefit of a machine, the thinking went, was that it would be unbiased.

There’s that old chestnut. Automation bias! Like Slim Shady, it’s back again. We want to believe that machines make decisions objectively and therefore better than humans. And especially feeble humans. You know. Women.

Of course, the other thing about the machine is that it has no idea the extent to which a learner has developed before coming to the machine; it doesn’t know what the learner overcame to get to class; it doesn’t know the learner’s intentions, priorities, or goals. I’m pretty bummed out about the idea of education without those factors taken into account.

Anyway, as Watters more than demonstrates in Teaching Machines, that dream of the automated and thus perfectly objective (BRB, LOL’ing forever) teacher is still alive and well. It’s present in the pursuit of tools to mechanize learning. But it’s also major fodder in the current culture war. When the need to be “objective” as teachers is the reason library shelves are empty in Florida, maybe we need to reconsider what objectivity in the classroom can or should look like. If oppression is happening around us, neutrality isn’t neutral: it’s oppressive. I am not sure how many times we need to learn that lesson.

Whose Neutral Is Neutral?

Have you asked ChatGPT a political question? Everyone thinks it’s in the tank for the other guys. It pointedly refuses to answer specific questions about its own politics, assuring users that it does not “have the ability to hold political beliefs or leanings.” Some users suggest that its answers fall basically within an Establishment Liberal political orientation (by American standards, so… Centre-Right to Canadians, Right to Europeans, etc.). It aligns with big business but feels kinda bad about it, basically. ChatGPT is down today as I write this post, but what I find more fascinating than whether its normative answers lean left or right is what it will refuse to answer under the guise of a question being too political. In my play with it, it has told me on a number of occasions that it cannot weigh in on questions of social justice, feminism, religion, and so on. Its coyness now stands in stark contrast to when it first launched and was all too willing to make a racist or sexist joke when prompted. But, in general, OpenAI has avoided a disaster on the scale of Microsoft’s NaziBot (Tay) of a few years back.

In the pursuit of neutral, however, ChatGPT falls into the same trap as modern media: bothsidesism and false equivalences. We’ve talked about algorithmic bias in these pages, but we also need to think of the biases of those who seek to fix it: establishment capitalism isn’t in the business of truth, justice, or equality. It’s in the business of minimizing boat-rocking. We know, for example, that Apple programmed Siri to never say “feminism” and to deflect questions on gendered topics and “remain neutral.” But some of what Siri is deflecting there is discussion of sexual harassment and #MeToo. What is neutral in that context?

There is always judgement in the execution of an algorithm. As we implement algorithms in education, we need to ask whom the neutrality we strive for actually serves, or whether we should be pursuing neutrality at all.

Sometimes Right Answers Aren’t Objective

It’s a truth universally ignored by people who want to sell you a new technology that learning is messy and hard to measure, and sometimes we can’t even correctly identify learning within ourselves in the moment. Mostly, we measure knowledge acquisition and certain objects or artifacts of the learning process, because that’s all we can do. From those we infer what learning has or hasn’t taken place. But how many times have you aced an exam and then immediately forgotten everything you learned (I’m looking at you, PSYC 3250: Motivation and Emotion)? How many times have you destroyed body and soul to turn out an essay that then fell flat with the instructor, even though you know you learned a ton in the process of writing it (oh, POLI 2300: Canadian Political Institutions, why’d you have to break a gal’s heart)? Indeed, one of the big reasons ungrading continues to gather steam as a movement is the imperfection of grades as a system for evaluating learning.

But what’s easy to measure is compliance: whether you followed the instructions. What all automated evaluation schemes measure — from a Skinner teaching machine to Microsoft Habits — is compliance. When a learning analytic tool tells you that a student is well-positioned for success in the course, it bases that judgement on things like how often they log in to the learning platform, how many things they click around on, how long they spend with a window open. Those things might correlate with learning. They also might not. Who knows? But it looks nice on a chart, so we get a report.

The most obvious analogues are the kinds of surveillance reports that tools like Office 365 are also happy to generate about you as a worker. (And remember: even if your institution does not use these reports as a metric, you can turn off the reporting, not the recording. The data is somewhere.) How many words did you type, how many meetings did you attend, and what percentage of the working day was your mouse moving? These sure are measures of… something. But do they add up to a measure of your skill and competency as an employee? Hm. I wonder.

There are places for evaluating compliance, certainly, that do connect to larger aims of education. There are important, life-or-death reasons for compliance. I need to know that my nurse knows how to put in my IV correctly, that my home inspector knows how to consult the building code efficiently, and that my accountant understands the finest points of their ethical code of conduct. But most learning (even the processes required to achieve those competencies) isn’t well-suited to compliance metrics. And while compliance metrics are a part of most courses of education, they aren’t themselves education. Obviously there are a million definitions of education, but I’m partial to Lawrence A. Cremin’s, circa 1976:

Education is the deliberate, systematic, and sustained effort to transmit, provoke or acquire knowledge, values, attitudes, skills or sensibilities as well as any learning that results from the effort.

Public Education, p. 27. I found this quotation in an old photocopied excerpt of unknown origin, tucked in my paper files from way back when we did the first Detox. Thanks to this excellent book chapter for helping me to flesh out the citation properly.

We can’t comply our way into values, attitudes, and sensibilities, and we can only partially assess knowledge and skills through compliance. Learning is something more, something harder. And understanding the learning that takes place is often a function of the relationship between the learner, the educator, the class as a whole, and the material being learned. There’s no ticky box to assess it.

My radical opinion is this: teacherly judgement matters in the evaluation of learning. For one thing, only the instructor and the students know the ins and outs of what has been taught in the classroom, even if we don’t always perceive the whole picture of what we’re doing in the same way. For another, only the instructor and the students know what the journey of learning has been within that classroom. This is also why standardized tests limit educational possibilities: they force us to teach to the test instead of following that journey. There’s no algorithm that can stand in for a holistic assessment of a learner, and even as scale presses us to seek out those solutions, we can at least refuse to accept the facade that these kinds of evaluations are meaningful.